MedFalcon v2 40b LoRA - Final

Model Description

This is a model release at 1 epoch. For evaluation use only! Limitations:

- Do not use to treat patients! Treat AI content as if you wrote it!!!

Architecture

nmitchko/medfalcon-v2-40b-lora is a LoRA for a large language model, fine-tuned specifically for medical domain tasks.

It is based on Falcon-40b at 40 billion parameters.

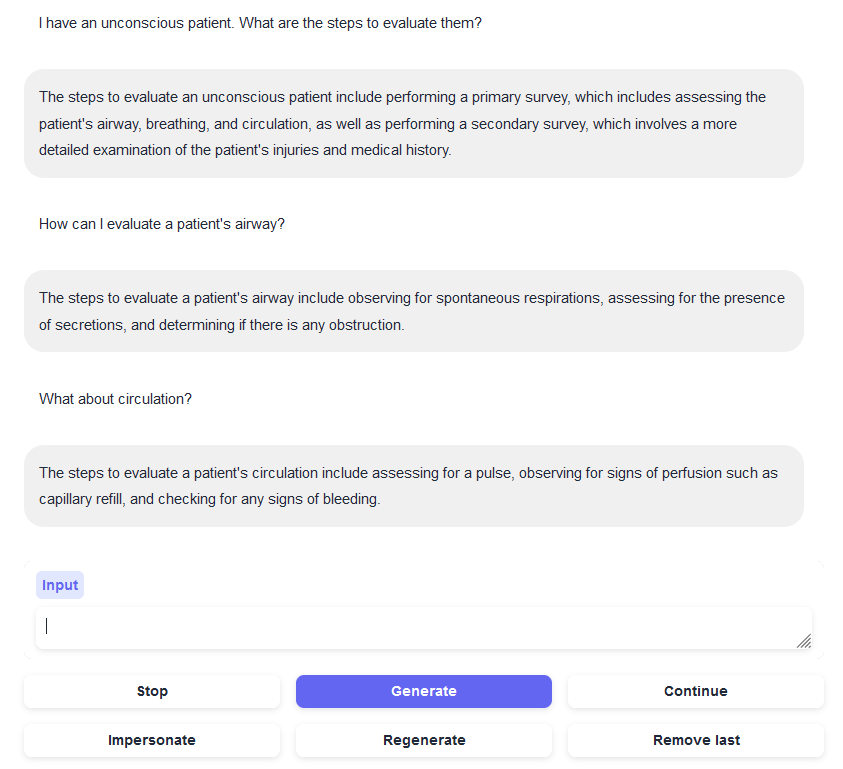

The primary goal of this model is to improve performance on question-answering and medical dialogue tasks. It was trained using LoRA, specifically QLoRA, to reduce the memory footprint.

See Training Parameters for more info. This LoRA supports 4-bit and 8-bit modes.
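As a rough illustration of why the 4-bit and 8-bit modes matter, the sketch below estimates the memory needed for the base model's weights alone at different precisions. It assumes exactly 40 billion parameters (the figure quoted above) and ignores activations, the KV cache, the LoRA weights, and quantization overhead, so real usage will be higher.

```python
# Back-of-the-envelope estimate of weight memory for the base model.
# Assumption: exactly 40e9 parameters; all runtime overheads are ignored.
PARAMS = 40_000_000_000

BITS_PER_PARAM = {"float16": 16, "8-bit": 8, "4-bit": 4}


def weight_memory_gib(n_params: int, bits: int) -> float:
    """Return the size of the weights in GiB at the given bit width."""
    return n_params * bits / 8 / 2**30


if __name__ == "__main__":
    for mode, bits in BITS_PER_PARAM.items():
        print(f"{mode:>8}: ~{weight_memory_gib(PARAMS, bits):.1f} GiB")
```

Under these assumptions the weights come to roughly 75 GiB in float16, 37 GiB in 8-bit, and 19 GiB in 4-bit.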

Requirements

bitsandbytes>=0.39.0

peft

transformers
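Before loading the model it can help to confirm that these packages are present. The following is a minimal sketch using only the standard library; the helper names (`parse_version`, `meets_minimum`, `check_requirements`) are hypothetical, and the version parsing is deliberately simple (it compares only the leading numeric components).

```python
# Check that the packages listed above are installed (standard library only).
import re
from importlib import metadata

# Minimum versions; None means this card does not pin a version.
REQUIREMENTS = {"bitsandbytes": "0.39.0", "peft": None, "transformers": None}


def parse_version(text: str) -> tuple:
    """Turn '0.39.0' into (0, 39, 0); stop at the first non-numeric component."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare two versions after padding the shorter one with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_requirements(requirements=REQUIREMENTS) -> dict:
    """Map each package name to 'ok', 'missing', or a 'too old' message."""
    report = {}
    for name, minimum in requirements.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        if minimum is not None and not meets_minimum(installed, minimum):
            report[name] = f"too old (found {installed}, need >= {minimum})"
        else:
            report[name] = "ok"
    return report


if __name__ == "__main__":
    for package, status in check_requirements().items():
        print(f"{package}: {status}")
```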

Steps to load this model:

- Load base model using transformers

- Apply LoRA using peft

# Load the base model with transformers, then apply the LoRA with peft
import torch
import transformers
from peft import PeftModel
from transformers import AutoModelForCausalLM, AutoTokenizer

base_model = "tiiuae/falcon-40b"
lora = "nmitchko/medfalcon-v2-40b-lora"

# Set to False to load in float16; for 4-bit, pass load_in_4bit=True instead
load_8bit = True

tokenizer = AutoTokenizer.from_pretrained(base_model)
model = AutoModelForCausalLM.from_pretrained(
    base_model,
    load_in_8bit=load_8bit,
    torch_dtype=torch.float16,
    trust_remote_code=True,
    device_map="auto",  # place the layers on the available devices
)

# Attach the LoRA weights to the base model
model = PeftModel.from_pretrained(model, lora)

# The model is already loaded and placed, so the pipeline only needs the objects
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
)

sequences = pipeline(
    "What does the drug ceftriaxone do?\nDoctor:",
    max_length=200,
    do_sample=True,
    top_k=40,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
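The loading code above mentions 4-bit as an alternative to 8-bit. A possible 4-bit variant using `BitsAndBytesConfig` is sketched below. The helper names are hypothetical, and the NF4 quantization type, double quantization, and bfloat16 compute dtype are assumptions (common QLoRA-style defaults), not settings confirmed by this card.

```python
# Hypothetical helpers for loading the base model in 4-bit or 8-bit.
# The 4-bit settings are assumptions, not values stated on this card.
BASE_MODEL = "tiiuae/falcon-40b"
LORA = "nmitchko/medfalcon-v2-40b-lora"


def quantization_kwargs(bits: int) -> dict:
    """Return keyword arguments for BitsAndBytesConfig for 4- or 8-bit loading."""
    if bits == 8:
        return {"load_in_8bit": True}
    if bits == 4:
        return {
            "load_in_4bit": True,
            "bnb_4bit_quant_type": "nf4",       # assumption
            "bnb_4bit_use_double_quant": True,  # assumption
        }
    raise ValueError("bits must be 4 or 8")


def load_medfalcon(bits: int = 4):
    """Load the quantized base model, then attach the LoRA."""
    # Imported lazily so quantization_kwargs can be used without a GPU stack.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    kwargs = quantization_kwargs(bits)
    if bits == 4:
        kwargs["bnb_4bit_compute_dtype"] = torch.bfloat16  # assumption
    tokenizer = AutoTokenizer.from_pretrained(BASE_MODEL)
    base = AutoModelForCausalLM.from_pretrained(
        BASE_MODEL,
        quantization_config=BitsAndBytesConfig(**kwargs),
        device_map="auto",
        trust_remote_code=True,
    )
    return tokenizer, PeftModel.from_pretrained(base, LORA)
```

Calling `load_medfalcon(bits=4)` downloads the full base model, so it is only practical on a machine with enough disk space and GPU memory.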

Training Parameters

The model was trained for 1 epoch on a custom, unreleased dataset named medconcat.

medconcat contains only human-generated content and weighs in at over 100 MiB of raw text.

| Item | Value | Units |
|---|---|---|
| LoRA Rank | 64 | - |
| LoRA Alpha | 16 | - |
| Learning Rate | 1e-4 | - |
| Dropout | 5 | % |
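For readers who want to see how these values map onto code, here is a minimal sketch of a matching `peft` configuration. Only the rank, alpha, dropout, and learning rate come from the table; `bias`, `task_type`, and `target_modules` are assumptions, and `build_lora_config` is a hypothetical helper rather than the training script actually used.

```python
# Hyperparameters taken from the table above.
LORA_RANK = 64
LORA_ALPHA = 16
LORA_DROPOUT = 0.05  # 5 %
LEARNING_RATE = 1e-4


def lora_scaling(alpha: int, rank: int) -> float:
    """Standard LoRA scales the low-rank update by alpha / rank."""
    return alpha / rank


def build_lora_config():
    """Build a peft LoraConfig; fields marked 'assumption' are not from this card."""
    from peft import LoraConfig  # imported lazily; requires peft

    return LoraConfig(
        r=LORA_RANK,
        lora_alpha=LORA_ALPHA,
        lora_dropout=LORA_DROPOUT,
        bias="none",                         # assumption
        task_type="CAUSAL_LM",               # assumption
        target_modules=["query_key_value"],  # assumption: Falcon attention projection
    )


if __name__ == "__main__":
    print(f"LoRA scaling factor: {lora_scaling(LORA_ALPHA, LORA_RANK)}")
```

With rank 64 and alpha 16, the standard LoRA scaling factor is 16 / 64 = 0.25.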

