Universal AnglE Embedding

Follow us on GitHub: https://github.com/SeanLee97/AnglE.

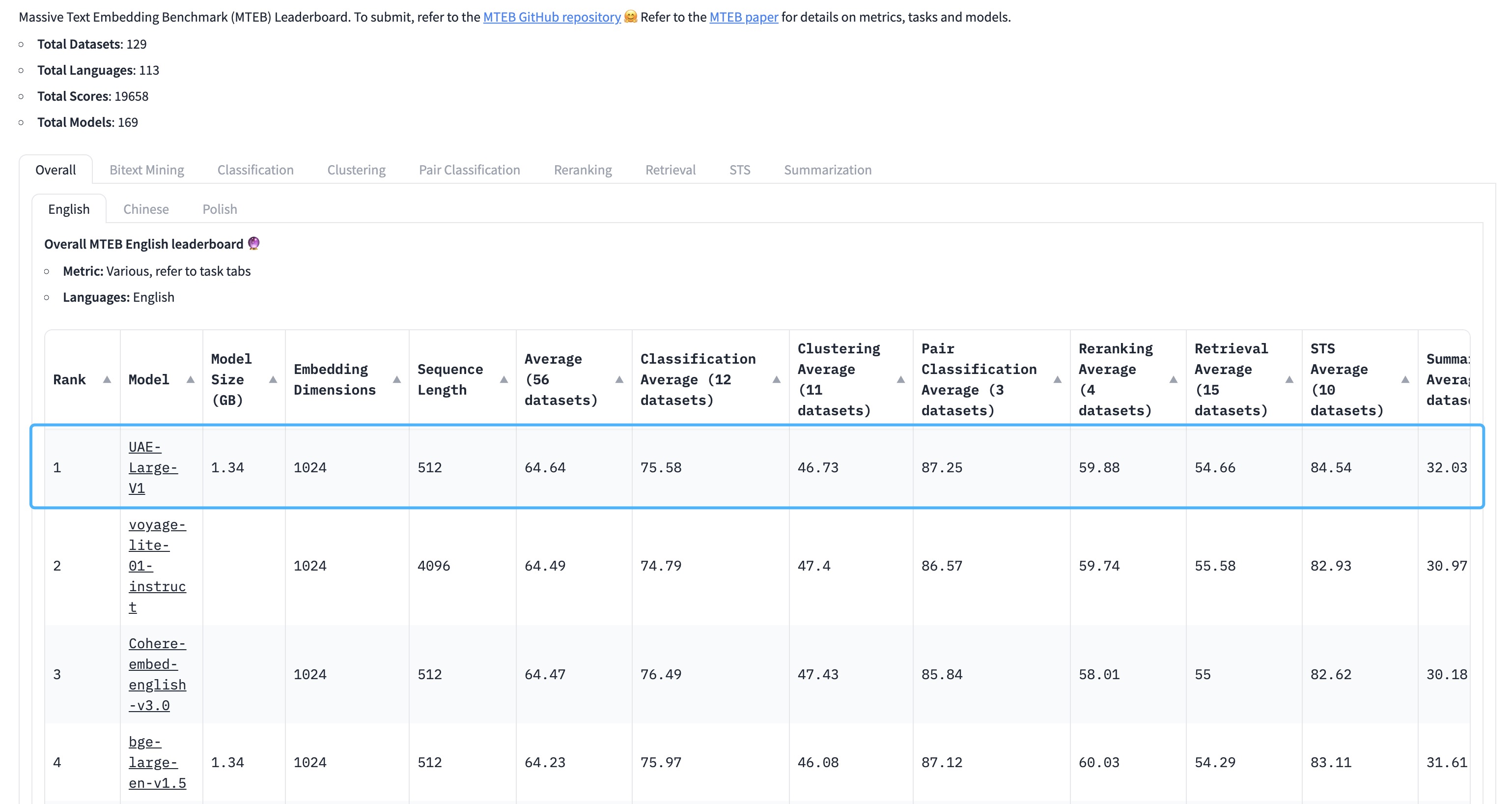

🔥 Our universal English sentence embedding WhereIsAI/UAE-Large-V1 achieves SOTA on the MTEB Leaderboard with an average score of 64.64!

Usage

```bash
python -m pip install -U angle-emb
```

- Non-Retrieval Tasks

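```python
from angle_emb import AnglE

# Load the pretrained model with CLS pooling and move it to the GPU
angle = AnglE.from_pretrained('WhereIsAI/UAE-Large-V1', pooling_strategy='cls').cuda()

# Encode a single sentence
vec = angle.encode('hello world', to_numpy=True)
print(vec)

# Encode a batch of sentences
vecs = angle.encode(['hello world1', 'hello world2'], to_numpy=True)
print(vecs)
```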
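Sentence embeddings like these are usually compared with cosine similarity. The snippet below is a minimal sketch of that comparison in plain Python. The helper `cosine_similarity` and the toy 3-dimensional vectors are illustrative assumptions, not part of `angle_emb`; in practice you would pass in rows of the array returned by `angle.encode(...)`.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (hypothetical helper)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy stand-ins for sentence embeddings (assumed values, not model output).
vec_a = [1.0, 2.0, 3.0]
vec_b = [2.0, 4.0, 6.0]   # same direction as vec_a
vec_c = [-3.0, 0.0, 1.0]  # orthogonal to vec_a

print(cosine_similarity(vec_a, vec_b))
print(cosine_similarity(vec_a, vec_c))
```

Vectors pointing in the same direction score close to 1, and orthogonal vectors score close to 0, regardless of their lengths.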

- Retrieval Tasks

For retrieval tasks, set the prompt to Prompts.C before encoding.

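```python
from angle_emb import AnglE, Prompts

# Load the pretrained model with CLS pooling and move it to the GPU
angle = AnglE.from_pretrained('WhereIsAI/UAE-Large-V1', pooling_strategy='cls').cuda()

# Use the retrieval prompt; inputs are then passed as dicts with a 'text' key
angle.set_prompt(prompt=Prompts.C)

# Encode a single text
vec = angle.encode({'text': 'hello world'}, to_numpy=True)
print(vec)

# Encode a batch of texts
vecs = angle.encode([{'text': 'hello world1'}, {'text': 'hello world2'}], to_numpy=True)
print(vecs)
```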
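Once a query and a set of documents are embedded, retrieval comes down to ranking the documents by their similarity to the query. The following is a minimal sketch of that step in plain Python. The function `rank_documents` and the 2-dimensional toy vectors are assumptions made for illustration and are not part of `angle_emb`; real inputs would be the embeddings produced by `angle.encode(...)`.

```python
import math


def _cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_documents(query_vec, doc_vecs, top_k=3):
    """Return up to top_k (doc_index, score) pairs, best match first (hypothetical helper)."""
    scored = [(i, _cosine(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy stand-ins for a query embedding and three document embeddings.
query_vec = [1.0, 0.0]
doc_vecs = [
    [0.0, 1.0],  # orthogonal to the query
    [1.0, 0.0],  # identical direction to the query
    [1.0, 1.0],  # partially aligned with the query
]

ranking = rank_documents(query_vec, doc_vecs, top_k=2)
for index, score in ranking:
    print(index, round(score, 3))
```

For larger collections, a vectorized or approximate nearest-neighbor search would normally replace this linear scan.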

Citation

If you use our pre-trained models, please consider supporting us by citing our work:

```bibtex
@article{li2023angle,
  title={AnglE-optimized Text Embeddings},
  author={Li, Xianming and Li, Jing},
  journal={arXiv preprint arXiv:2309.12871},
  year={2023}
}
```


Evaluation results

All scores are self-reported on the test split.

| Dataset | Metric | Score |
| --- | --- | --- |
| MTEB AmazonCounterfactualClassification (en) | accuracy | 75.552 |
| MTEB AmazonCounterfactualClassification (en) | ap | 38.264 |
| MTEB AmazonCounterfactualClassification (en) | f1 | 69.410 |
| MTEB AmazonPolarityClassification | accuracy | 92.843 |
| MTEB AmazonPolarityClassification | ap | 89.576 |
| MTEB AmazonPolarityClassification | f1 | 92.826 |
| MTEB AmazonReviewsClassification (en) | accuracy | 48.292 |
| MTEB AmazonReviewsClassification (en) | f1 | 47.903 |
| MTEB ArguAna | map_at_1 | 42.105 |
| MTEB ArguAna | map_at_10 | 58.181 |