Fork of t5-11b

This is a fork of t5-11b that implements a custom handler.py as an example of how to use t5-11b with Inference Endpoints on a single NVIDIA T4.
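Inference Endpoints picks up a custom handler.py by instantiating a class named EndpointHandler. It calls __init__(path) once with the path to the model files, then calls __call__(data) for each request. The sketch below shows that shape for a text-to-text model. It is not the handler.py shipped in this repository. Several choices are assumptions: loading through pipeline("text2text-generation"), torch_dtype=torch.float16, and device_map="auto" (which requires accelerate). The 11B weights alone take roughly 22 GB in fp16, which is more than a T4's 16 GB, so the real handler may place or quantize them differently.

```python
from typing import Any, Dict, List


class EndpointHandler:
    """Minimal custom-handler sketch (not this repository's actual handler.py)."""

    def __init__(self, path: str = ""):
        # Imported here so the request-parsing logic can be exercised without
        # torch/transformers installed; a real handler.py would import at the top.
        import torch
        from transformers import pipeline

        # Assumption: fp16 weights placed by accelerate via device_map="auto",
        # which can offload layers to CPU when the GPU is too small.
        self.pipeline = pipeline(
            "text2text-generation",
            model=path,
            torch_dtype=torch.float16,
            device_map="auto",
        )

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Request shape used by the client examples: {"inputs": ..., "parameters": {...}}
        inputs = data.pop("inputs", data)
        parameters = data.pop("parameters", None) or {}
        # Generation kwargs such as max_length are forwarded to the pipeline as-is.
        return self.pipeline(inputs, **parameters)
```

Because the payload's "parameters" dict is unpacked straight into the pipeline call, a client-side "max_length": 40 becomes pipeline(inputs, max_length=40).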

Model Card for T5 11B - fp16

Use with Inference Endpoints

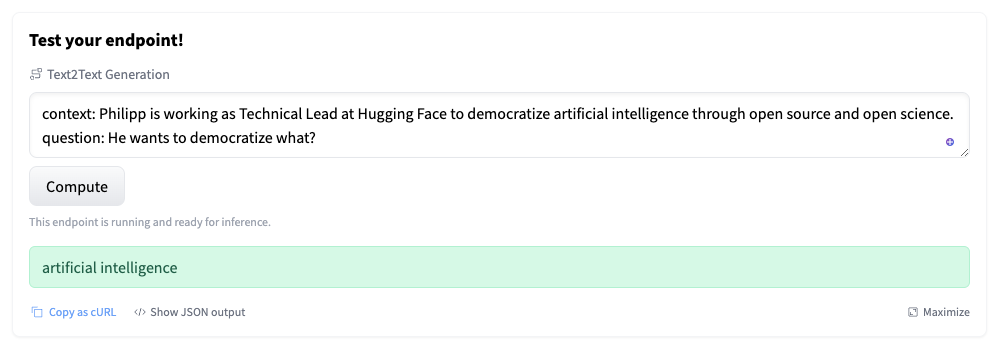

Hugging Face Inference Endpoints can be used with an HTTP client in any language. We will use Python and the requests library to send our requests (make sure you have it installed: pip install requests).

Send requests with Python

import requests as r

ENDPOINT_URL = ""  # URL of your endpoint
HF_TOKEN = ""  # your Hugging Face access token

# payload samples
regular_payload = {"inputs": "translate English to German: The weather is nice today."}
parameter_payload = {
    "inputs": "translate English to German: Hello my name is Philipp and I am a Technical Leader at Hugging Face",
    "parameters": {
        "max_length": 40,
    },
}

# HTTP headers for authorization
headers = {
    "Authorization": f"Bearer {HF_TOKEN}",
    "Content-Type": "application/json",
}

# send request
response = r.post(ENDPOINT_URL, headers=headers, json=parameter_payload)
response.raise_for_status()  # raise an exception on 4xx/5xx responses
generated_text = response.json()
print(generated_text)
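Since any HTTP client works, the same request can also be sent with only the Python standard library. The following is a minimal sketch that reuses the payload and header shapes from the example above. The helper names build_payload, make_request, and query are hypothetical and are not part of any Hugging Face library.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_payload(text: str, parameters: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build the JSON body used above: "inputs" plus optional "parameters"."""
    payload: Dict[str, Any] = {"inputs": text}
    if parameters:
        payload["parameters"] = parameters
    return payload


def make_request(endpoint_url: str, token: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Prepare a POST request with the same Bearer-token and JSON headers."""
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def query(endpoint_url: str, token: str, payload: Dict[str, Any], timeout: float = 60.0) -> Any:
    """Send the request and decode the JSON reply; urlopen raises HTTPError on 4xx/5xx."""
    with urllib.request.urlopen(make_request(endpoint_url, token, payload), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage, assuming ENDPOINT_URL and HF_TOKEN are set as above: query(ENDPOINT_URL, HF_TOKEN, build_payload("translate English to German: The weather is nice today.", {"max_length": 40})).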
