
tags:

- bertopic

- summcomparer

- document_text

library_name: bertopic

pipeline_tag: text-classification

inference: false

license: apache-2.0

datasets:

- pszemraj/summcomparer-gauntlet-v0p1

language:

- en

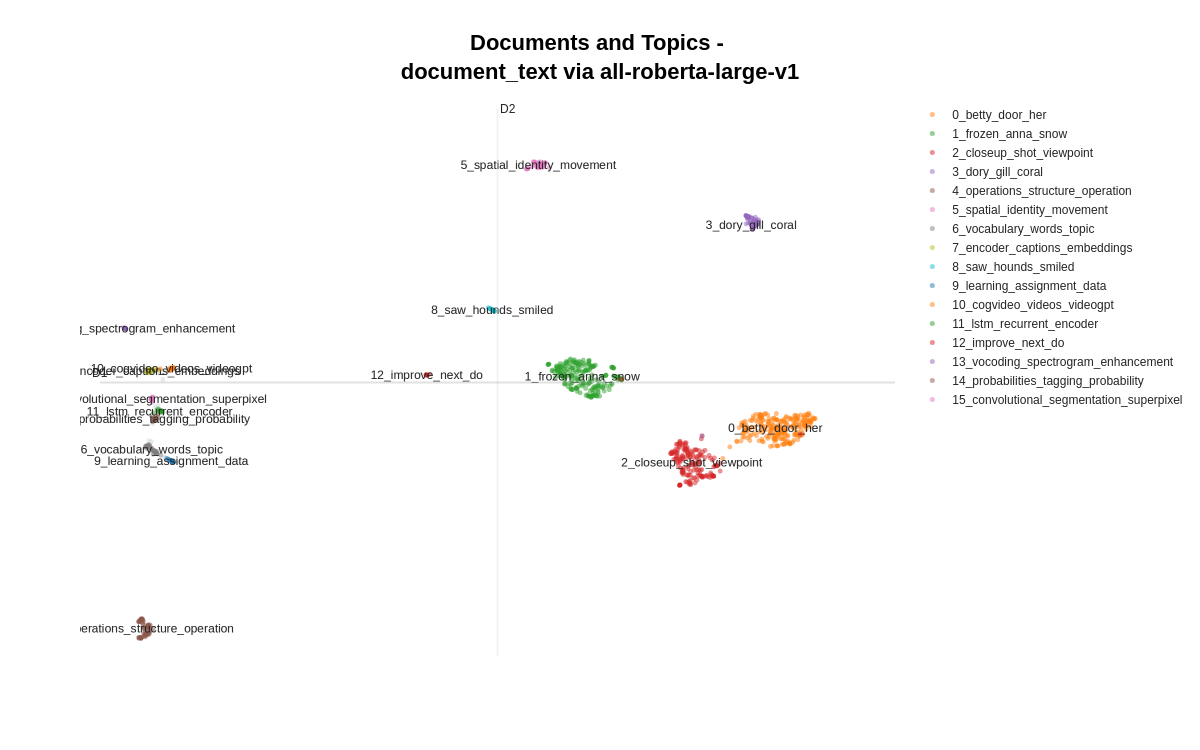

BERTopic-summcomparer-gauntlet-v0p1-all-roberta-large-v1-document_text

This is a BERTopic model. BERTopic is a flexible, modular topic modeling framework that extracts easily interpretable topics from large datasets.

Usage

To use this model, install BERTopic together with safetensors:

pip install -U bertopic safetensors

You can use the model as follows:

from bertopic import BERTopic

topic_model = BERTopic.load("pszemraj/BERTopic-summcomparer-gauntlet-v0p1-all-roberta-large-v1-document_text")

topic_model.get_topic_info()
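Beyond inspecting the topic table, a loaded model can also assign topics to unseen text. The sketch below is a minimal illustration and not part of the original card: `assign_topics` is a hypothetical helper name, and it assumes that `BERTopic.load` can restore the embedding model from the Hub (if it cannot, one can be passed through the `embedding_model` argument of `BERTopic.load`). The import is deferred so that defining the helper does not trigger a download.

```python
MODEL_ID = "pszemraj/BERTopic-summcomparer-gauntlet-v0p1-all-roberta-large-v1-document_text"


def assign_topics(docs, model_id=MODEL_ID):
    """Return one (topic_id, label) pair per input document.

    Hypothetical helper: calling it downloads the model from the Hub.
    """
    # Deferred import: nothing is loaded until the helper is called.
    from bertopic import BERTopic

    topic_model = BERTopic.load(model_id)

    # transform() returns the predicted topic IDs and their probabilities.
    topics, _probs = topic_model.transform(docs)

    # Map each topic ID to its label via the "Topic" and "Name" columns.
    info = topic_model.get_topic_info()
    names = dict(zip(info["Topic"], info["Name"]))
    return [(int(t), names.get(int(t), "unknown")) for t in topics]
```

Calling `assign_topics(["some document text"])` would return a list with one `(topic_id, label)` pair; a topic ID of -1 marks a document that BERTopic treats as an outlier.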

Topic overview

- Number of topics: 17

- Number of training documents: 995

Overview of all topics:

| Topic ID | Topic Keywords | Topic Frequency | Label |

|---|---|---|---|

| -1 | clustering - convolutional - neural - hierarchical - autoregressive | 11 | -1_clustering_convolutional_neural_hierarchical |

| 0 | betty - door - her - gillis - room | 15 | 0_betty_door_her_gillis |

| 1 | frozen - anna - snow - hans - elsa | 241 | 1_frozen_anna_snow_hans |

| 2 | closeup - shot - viewpoint - umpire - camera | 211 | 2_closeup_shot_viewpoint_umpire |

| 3 | dory - gill - coral - marlin - ocean | 171 | 3_dory_gill_coral_marlin |

| 4 | operations - structure - operation - theory - interpretation | 60 | 4_operations_structure_operation_theory |

| 5 | spatial - identity - movement - identities - noir | 59 | 5_spatial_identity_movement_identities |

| 6 | vocabulary - words - topic - text - topics | 45 | 6_vocabulary_words_topic_text |

| 7 | encoder - captions - embeddings - decoder - caption | 40 | 7_encoder_captions_embeddings_decoder |

| 8 | saw - hounds - smiled - had - hunt | 26 | 8_saw_hounds_smiled_had |

| 9 | learning - assignment - data - research - project | 22 | 9_learning_assignment_data_research |

| 10 | cogvideo - videos - videogpt - video - clips | 21 | 10_cogvideo_videos_videogpt_video |

| 11 | lstm - recurrent - encoder - seq2seq - neural | 18 | 11_lstm_recurrent_encoder_seq2seq |

| 12 | improve - next - do - going - good | 17 | 12_improve_next_do_going |

| 13 | vocoding - spectrogram - enhancement - melspectrogram - audio | 14 | 13_vocoding_spectrogram_enhancement_melspectrogram |

| 14 | probabilities - tagging - probability - words - gram | 12 | 14_probabilities_tagging_probability_words |

| 15 | convolutional - segmentation - superpixel - convolutions - superpixels | 12 | 15_convolutional_segmentation_superpixel_convolutions |
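The `Label` column follows a visible pattern: the topic ID followed by the first four keywords, joined by underscores. The sketch below reproduces that pattern for one row and checks that the frequencies of all 17 topics add up to the 995 training documents. `make_label` is a hypothetical helper written for illustration, not a BERTopic function.

```python
# Frequencies copied from the table, keyed by topic ID.
# Topic -1 is the outlier topic in BERTopic.
FREQUENCIES = {
    -1: 11, 0: 15, 1: 241, 2: 211, 3: 171, 4: 60, 5: 59, 6: 45, 7: 40,
    8: 26, 9: 22, 10: 21, 11: 18, 12: 17, 13: 14, 14: 12, 15: 12,
}


def make_label(topic_id, keywords):
    """Join the topic ID and the first four keywords with underscores."""
    return "_".join([str(topic_id), *keywords[:4]])


label = make_label(1, ["frozen", "anna", "snow", "hans", "elsa"])
total = sum(FREQUENCIES.values())
outlier_share = FREQUENCIES[-1] / total

print(label)                          # 1_frozen_anna_snow_hans
print(len(FREQUENCIES), total)        # 17 995
print(f"outliers: {outlier_share:.1%}")  # outliers: 1.1%
```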

hierarchy

Training hyperparameters

- calculate_probabilities: True

- language: None

- low_memory: False

- min_topic_size: 10

- n_gram_range: (1, 1)

- nr_topics: None

- seed_topic_list: None

- top_n_words: 10

- verbose: True
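Each hyperparameter listed above corresponds to a keyword argument of the `BERTopic` constructor. The following sketch shows how they could be passed when fitting a comparable model. It rests on assumptions: the embedding model name is inferred from the model's title rather than stated in the hyperparameter list, `build_topic_model` is a hypothetical helper, and the UMAP and HDBSCAN settings are not given here, so library defaults would apply and the result may differ from this model.

```python
# Hyperparameters as listed in this card.
HYPERPARAMS = {
    "calculate_probabilities": True,
    "language": None,
    "low_memory": False,
    "min_topic_size": 10,
    "n_gram_range": (1, 1),
    "nr_topics": None,
    "seed_topic_list": None,
    "top_n_words": 10,
    "verbose": True,
}


def build_topic_model(embedding_model="sentence-transformers/all-roberta-large-v1"):
    """Create an unfitted BERTopic instance with the card's settings.

    Assumption: the default embedding model name is taken from the model
    title, not from the hyperparameter list.
    """
    # Deferred import so the settings can be inspected without bertopic.
    from bertopic import BERTopic

    return BERTopic(embedding_model=embedding_model, **HYPERPARAMS)
```

The returned model would then be fitted with `fit_transform(docs)` on a list of document strings.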

Framework versions

- Numpy: 1.22.4

- HDBSCAN: 0.8.29

- UMAP: 0.5.3

- Pandas: 1.5.3

- Scikit-Learn: 1.2.2

- Sentence-transformers: 2.2.2

- Transformers: 4.29.2

- Numba: 0.56.4

- Plotly: 5.13.1

- Python: 3.10.11
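When loading the model in a different environment, it can help to compare installed package versions against the ones listed above. The sketch below uses only the standard library. The PyPI distribution names (for example `umap-learn` for UMAP) and the helper `compare_versions` are not part of the original card, and a version mismatch does not by itself mean the model will fail to load.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions from this card, keyed by PyPI distribution name.
CARD_VERSIONS = {
    "numpy": "1.22.4",
    "hdbscan": "0.8.29",
    "umap-learn": "0.5.3",
    "pandas": "1.5.3",
    "scikit-learn": "1.2.2",
    "sentence-transformers": "2.2.2",
    "transformers": "4.29.2",
    "numba": "0.56.4",
    "plotly": "5.13.1",
}


def compare_versions(expected=CARD_VERSIONS):
    """Return {package: (card_version, installed_version_or_None, matches)}."""
    report = {}
    for package, wanted in expected.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[package] = (wanted, installed, installed == wanted)
    return report


for package, (wanted, installed, matches) in compare_versions().items():
    status = "ok" if matches else "differs"
    print(f"{package}: card={wanted} installed={installed} ({status})")
```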