Phi-3-small-8k-instruct: 6 layers pruned

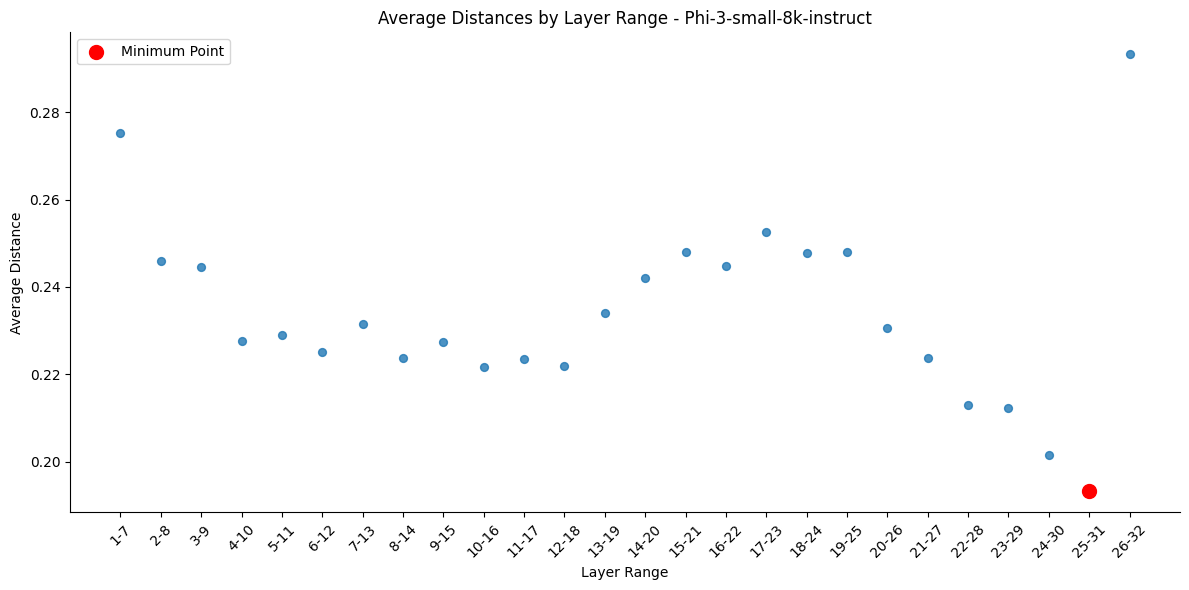

This is a layer-pruned language model created using mergekit. The layers to prune were selected based on the average distances shown in the image above.
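The card does not say which distance metric was used. One common choice for this kind of pruning, and the one assumed here, is the angular distance (arccos of the cosine similarity, divided by π) between the hidden state entering layer `start` and the one entering layer `start + n`, averaged over tokens. The block of `n` layers with the smallest distance changes the representation the least and is the candidate to drop. The sketch below assumes the hidden states have already been collected (for example with `output_hidden_states=True` in transformers, which returns the embeddings plus one output per layer) and converted to plain lists; `angular_distance` and `best_block_to_prune` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def angular_distance(a: Vector, b: Vector) -> float:
    """Angular distance in [0, 1]: arccos(cosine similarity) / pi."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_sim = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_sim) / math.pi


def best_block_to_prune(
    hidden_states: List[List[Vector]], n: int
) -> Tuple[int, float]:
    """Return (start_layer, mean_distance) for the n-layer block to drop.

    hidden_states[i] holds one vector per token for the input to layer i,
    so a model with L layers yields L + 1 entries.
    """
    num_layers = len(hidden_states) - 1
    best_start, best_dist = -1, float("inf")
    for start in range(num_layers - n + 1):
        before, after = hidden_states[start], hidden_states[start + n]
        # Average the per-token distance between the block's input and output.
        dists = [angular_distance(a, b) for a, b in zip(before, after)]
        mean_dist = sum(dists) / len(dists)
        if mean_dist < best_dist:
            best_start, best_dist = start, mean_dist
    return best_start, best_dist


# Toy example: 4 "layers", 1 token, 2-dimensional hidden states.
states = [[[1.0, 0.0]], [[0.0, 1.0]], [[1.0, 1.0]], [[0.0, 1.0]], [[1.0, 0.0]]]
print(best_block_to_prune(states, n=2))  # (1, 0.0)
```

Read against this card's configuration (mergekit `layer_range` values are half-open), the removed block corresponds to `start = 25`, `n = 6`, i.e. layers 25 to 30.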

Quick eval

Quick eval for: pszemraj/Phi-3-small-8k-prune6

`hf (pretrained=pszemraj/Phi-3-small-8k-prune6,trust_remote_code=True,dtype=bfloat16), gen_kwargs: (None), limit: None, num_fewshot: None, batch_size: 2`

| Tasks | Version | Filter | n-shot | Metric | Value | Stderr |
|---|---|---|---|---|---:|---:|
| arc_easy | 1 | none | 0 | acc | 0.7479 | ± 0.0089 |
| | | none | 0 | acc_norm | 0.7125 | ± 0.0093 |
| boolq | 2 | none | 0 | acc | 0.7489 | ± 0.0076 |
| lambada_openai | 1 | none | 0 | perplexity | 27.3270 | ± 1.0861 |
| | | none | 0 | acc | 0.3600 | ± 0.0067 |
| openbookqa | 1 | none | 0 | acc | 0.3360 | ± 0.0211 |
| | | none | 0 | acc_norm | 0.4020 | ± 0.0219 |
| piqa | 1 | none | 0 | acc | 0.7182 | ± 0.0105 |
| | | none | 0 | acc_norm | 0.7329 | ± 0.0103 |
| winogrande | 1 | none | 0 | acc | 0.7143 | ± 0.0127 |
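The Stderr column is the standard error of the mean per-example score. Assuming the harness computes it as the sample standard deviation (with n - 1) divided by √n, and assuming the standard evaluation split sizes hard-coded below (they are not stated on this card), the accuracy errors can be recomputed from the accuracy alone, which also gives a rough 95% interval for comparing against the base model:

```python
import math
from typing import Tuple

# Assumed evaluation split sizes (standard splits; not stated on this card).
SPLIT_SIZES = {
    "arc_easy": 2376,
    "boolq": 3270,
    "lambada_openai": 5153,
    "openbookqa": 500,
    "piqa": 1838,
    "winogrande": 1267,
}


def accuracy_stderr(acc: float, n: int) -> float:
    """Standard error of the mean of n 0/1 scores, using the (n - 1) sample variance."""
    return math.sqrt(acc * (1.0 - acc) / (n - 1))


def ci95(acc: float, n: int) -> Tuple[float, float]:
    """Normal-approximation 95% confidence interval for an accuracy."""
    half_width = 1.96 * accuracy_stderr(acc, n)
    return acc - half_width, acc + half_width


# acc values copied from the table above.
for task, acc in [("arc_easy", 0.7479), ("boolq", 0.7489), ("winogrande", 0.7143)]:
    n = SPLIT_SIZES[task]
    low, high = ci95(acc, n)
    print(f"{task}: stderr={accuracy_stderr(acc, n):.4f}, 95% CI=({low:.3f}, {high:.3f})")
```

With these assumed sizes the recomputed values agree with the Stderr column for the acc and acc_norm rows; the formula does not apply to the lambada_openai perplexity row.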

Usage

Some further pre-training would likely help, but the model already appears capable of generating coherent text as is.

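```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Phi-3-small ships custom modeling and tokenizer code, so trust_remote_code is required.
# The tokenizer is loaded from the base model repo.
tokenizer = AutoTokenizer.from_pretrained(
    "microsoft/Phi-3-small-8k-instruct", trust_remote_code=True
)
model = AutoModelForCausalLM.from_pretrained(
    "pszemraj/Phi-3-small-8k-prune6", trust_remote_code=True
)
```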

Merge Details

Merge Method

This model was merged using the passthrough merge method.
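With the passthrough method, mergekit copies the selected layer slices unchanged and stacks them in order; no weights are averaged or interpolated. The sketch below uses a hypothetical `passthrough_layer_map` helper to list which original layer ends up at each position of the pruned model. It assumes mergekit's half-open `[start, end)` reading of `layer_range` and a 32-layer base model, and uses the two slices from this card's configuration.

```python
from typing import List, Tuple

# (start, end) pairs from this card's config; end is exclusive (assumed mergekit convention).
SLICES = [(0, 25), (31, 32)]
NUM_BASE_LAYERS = 32  # assumed decoder depth of Phi-3-small-8k-instruct


def passthrough_layer_map(slices: List[Tuple[int, int]]) -> List[int]:
    """Return, for each layer of the merged model, the index of its source layer."""
    layer_map: List[int] = []
    for start, end in slices:
        layer_map.extend(range(start, end))
    return layer_map


layer_map = passthrough_layer_map(SLICES)
dropped = sorted(set(range(NUM_BASE_LAYERS)) - set(layer_map))
print(len(layer_map), dropped)  # 26 [25, 26, 27, 28, 29, 30]
```

Under these assumptions the merged model keeps 26 layers, and its last layer is the base model's final layer 31, which matches the "6 layers pruned" in the title.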

Models Merged

The following models were included in the merge:

- microsoft/Phi-3-small-8k-instruct

Configuration

The following YAML configuration was used to produce this model:

```yaml
dtype: bfloat16
merge_method: passthrough
slices:
  - sources:
      - layer_range: [0, 25]
        model: microsoft/Phi-3-small-8k-instruct
  - sources:
      - layer_range: [31, 32]
        model: microsoft/Phi-3-small-8k-instruct
```
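The configuration follows directly from the choice of pruned block. As a sketch, a hypothetical `prune_config` helper can emit an equivalent passthrough config for any block of `n` layers starting at `start` in a model with `num_layers` layers. It writes the YAML text by hand, so no YAML library is needed, and it assumes the half-open `layer_range` convention; empty slices (when the block touches either end of the model) are skipped.

```python
from typing import List, Tuple


def prune_config(model: str, start: int, n: int, num_layers: int) -> str:
    """Build a mergekit passthrough YAML config that drops layers start .. start + n - 1."""
    if n <= 0 or start < 0 or start + n > num_layers:
        raise ValueError("pruned block must lie inside the model")
    # Keep everything before and after the pruned block; skip empty slices.
    kept: List[Tuple[int, int]] = [
        (lo, hi) for lo, hi in [(0, start), (start + n, num_layers)] if hi > lo
    ]
    lines = ["dtype: bfloat16", "merge_method: passthrough", "slices:"]
    for lo, hi in kept:
        lines += [
            "  - sources:",
            f"      - layer_range: [{lo}, {hi}]",
            f"        model: {model}",
        ]
    return "\n".join(lines) + "\n"


print(prune_config("microsoft/Phi-3-small-8k-instruct", start=25, n=6, num_layers=32))
```

For `start=25`, `n=6`, `num_layers=32` this prints the same slices as the configuration above.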

