Informative-Drawings-ONNX

This repository contains ONNX versions of the models from C. Chan et al., "Informative Drawings: Learning to generate line drawings that convey geometry and semantics", CVPR 2021.

Environment

This repository has been tested with the following environment:

- Ubuntu 22.04
- Python 3.11
- ONNX Runtime 1.19

Usage

Install dependencies:

```shell
pip install numpy onnxruntime Pillow
```

Save the following script and run it, passing `--image` (path to the input image) and `--model` (path to the ONNX model):

```python
import argparse
import time
from contextlib import contextmanager

import numpy as np
import onnxruntime
from PIL import Image


@contextmanager
def timer(name: str):
    """Print the wall-clock time spent inside the `with` block."""
    start = time.perf_counter()
    yield
    end = time.perf_counter()
    print(f"{name}: {end - start:.3f} [sec]")


def preprocess(image: Image.Image, size: int) -> np.ndarray:
    """Resize to size x size and convert to a (1, 3, H, W) float32 array in [0, 1]."""
    image = image.resize((size, size))
    data = np.asarray(image, dtype=np.float32) / 255.0
    data = np.transpose(data, (2, 0, 1))  # (h, w, c) -> (c, h, w)
    data = np.expand_dims(data, axis=0)  # (c, h, w) -> (b, c, h, w)
    return data


def postprocess(output: np.ndarray, original_size: tuple[int, int] | None = None) -> Image.Image:
    """Convert the (1, 1, H, W) model output to a grayscale PIL image."""
    # Clip to [0, 1] so that out-of-range values do not wrap around when cast to uint8
    output_image = (np.clip(output[0, 0], 0.0, 1.0) * 255).astype(np.uint8)
    output_image = Image.fromarray(output_image).convert("L")
    if original_size:
        output_image = output_image.resize(original_size)
    return output_image


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument("--image", type=str, required=True, help="Path to input image")
    parser.add_argument("--model", type=str, required=True, help="Path to ONNX model")
    args = parser.parse_args()

    # Load the input image and cap its longest side at 1536 px (aspect ratio is kept)
    input_image = Image.open(args.image).convert("RGB")
    input_image.thumbnail((1536, 1536))

    # Load the model; the script assumes a square (1, 3, size, size) input
    with timer("Load model"):
        session = onnxruntime.InferenceSession(args.model)
    model_input = session.get_inputs()[0]
    size = model_input.shape[2]  # height (equal to width)

    # Inference
    input_data = preprocess(input_image, size)
    with timer("Inference"):
        output_data = session.run(None, {model_input.name: input_data})[0]

    # Resize the line drawing back to the (thumbnailed) input size and display it
    output_image = postprocess(output_data, input_image.size)
    output_image.show()


if __name__ == "__main__":
    main()
```
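The array layout the script relies on can be checked without a model or an image file. The sketch below assumes only NumPy and uses two hypothetical helpers, `to_nchw` and `to_gray_uint8`, that follow the same conversions as `preprocess` and `postprocess`, with an explicit clip to [0, 1] before the uint8 cast: an (H, W, 3) uint8 RGB array becomes a (1, 3, H, W) float32 batch in [0, 1], and a (1, 1, H, W) output becomes an (H, W) uint8 grayscale array. A random array stands in for a decoded image, and the channel mean stands in for the model output.

```python
import numpy as np


def to_nchw(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 RGB array to a (1, 3, H, W) float32 batch in [0, 1]."""
    x = rgb.astype(np.float32) / 255.0  # scale to [0, 1], stays float32
    x = np.transpose(x, (2, 0, 1))      # (H, W, C) -> (C, H, W)
    return x[np.newaxis, ...]           # (C, H, W) -> (1, C, H, W)


def to_gray_uint8(output: np.ndarray) -> np.ndarray:
    """Convert a (1, 1, H, W) float output to an (H, W) uint8 array."""
    plane = output[0, 0]                      # drop the batch and channel axes
    plane = np.clip(plane, 0.0, 1.0)          # guard against values outside [0, 1]
    return (plane * 255.0).astype(np.uint8)   # rescale to [0, 255] (truncating)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # A fake 4x6 RGB image stands in for a decoded PIL image (hypothetical data)
    rgb = rng.integers(0, 256, size=(4, 6, 3), dtype=np.uint8)
    batch = to_nchw(rgb)
    print(batch.shape, batch.dtype)  # (1, 3, 4, 6) float32
    # Pretend the model returned the channel mean as a single-channel map
    fake_output = batch.mean(axis=1, keepdims=True)
    gray = to_gray_uint8(fake_output)
    print(gray.shape, gray.dtype)    # (4, 6) uint8
```

Because the axis order is (batch, channel, height, width), the pixel at row `r`, column `c` of the input ends up at `batch[0, :, r, c]`.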

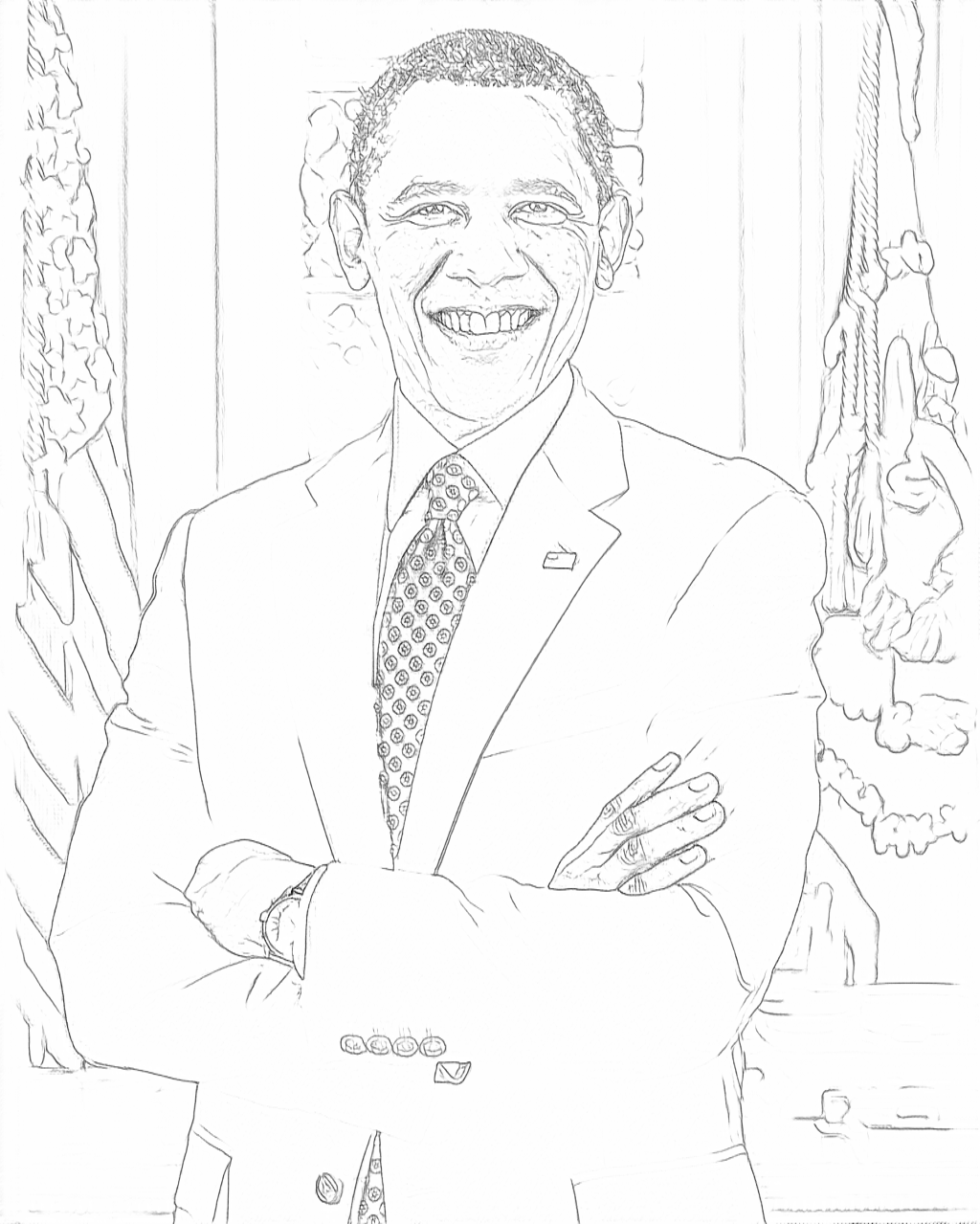

Examples

The larger the model's input size, the more detailed and vivid the resulting line drawing; the smaller the input size, the stronger the deformation. Choose the model whose input size suits your purpose.
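To make "input size" concrete, the sketch below estimates the two resize steps in the usage script: `thumbnail` caps the longest side at 1536 px, and `preprocess` then resizes the result to a square of the model's input size. `resize_report` is a hypothetical helper; its thumbnail rounding only approximates Pillow's and may differ by a pixel, and the model sizes in the usage loop are illustrative, not necessarily the sizes of the models in this repository.

```python
from dataclasses import dataclass


@dataclass
class ResizeReport:
    fitted_size: tuple[int, int]  # (width, height) after the thumbnail step
    scale_x: float                # horizontal scale to the model input (< 1 means downscaling)
    scale_y: float                # vertical scale to the model input (< 1 means downscaling)
    aspect_stretch: float         # scale_y / scale_x; 1.0 means no aspect distortion


def resize_report(width: int, height: int, model_size: int, max_side: int = 1536) -> ResizeReport:
    # Approximate Image.thumbnail: shrink (never enlarge) to fit within
    # max_side x max_side while keeping the aspect ratio.
    factor = min(1.0, max_side / width, max_side / height)
    w = max(1, round(width * factor))
    h = max(1, round(height * factor))
    # preprocess() then resizes to a square model_size x model_size input.
    sx = model_size / w
    sy = model_size / h
    return ResizeReport((w, h), sx, sy, sy / sx)


if __name__ == "__main__":
    # Hypothetical model input sizes, for illustration only
    for size in (256, 512, 1024):
        r = resize_report(3000, 2000, size)
        print(f"{size}: fitted={r.fitted_size}, scale_x={r.scale_x:.3f}, "
              f"scale_y={r.scale_y:.3f}, stretch={r.aspect_stretch:.2f}")
```

`aspect_stretch` works out to the fitted width divided by the fitted height for every model size, so the quantity that changes with the model's input size is the downscale factor.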

License

This repository is released under the MIT License.

Acknowledgement

- The pretrained `.pth` file is from Informative Drawings (Official Repository)

- The input sample is borrowed from Barack Obama - Wikipedia