Spaces:

Runtime error

DensePose Datasets

We summarize the datasets used in various DensePose training schedules and describe different available annotation types.

Table of Contents

General Information

DensePose annotations are typically stored in JSON files. Their structure follows the COCO Data Format, the basic data structure is outlined below:

{

"info": info,

"images": [image],

"annotations": [annotation],

"licenses": [license],

}

info{

"year": int,

"version": str,

"description": str,

"contributor": str,

"url": str,

"date_created": datetime,

}

image{

"id": int,

"width": int,

"height": int,

"file_name": str,

"license": int,

"flickr_url": str,

"coco_url": str,

"date_captured": datetime,

}

license{

"id": int, "name": str, "url": str,

}

DensePose annotations can be of two types: chart-based annotations or continuous surface embeddings annotations. We give more details on each of the two annotation types below.

Chart-based Annotations

These annotations assume a single 3D model which corresponds to

all the instances in a given dataset.

3D model is assumed to be split into charts. Each chart has its own

2D parametrization through inner coordinates U and V, typically

taking values in [0, 1].

Chart-based annotations consist of point-based annotations and segmentation annotations. Point-based annotations specify, for a given image point, which model part it belongs to and what are its coordinates in the corresponding chart. Segmentation annotations specify regions in an image that are occupied by a given part. In some cases, charts associated with point annotations are more detailed than the ones associated with segmentation annotations. In this case we distinguish fine segmentation (associated with points) and coarse segmentation (associated with masks).

Point-based annotations:

dp_x and dp_y: image coordinates of the annotated points along

the horizontal and vertical axes respectively. The coordinates are defined

with respect to the top-left corner of the annotated bounding box and are

normalized assuming the bounding box size to be 256x256;

dp_I: for each point specifies the index of the fine segmentation chart

it belongs to;

dp_U and dp_V: point coordinates on the corresponding chart.

Each fine segmentation part has its own parametrization in terms of chart

coordinates.

Segmentation annotations:

dp_masks: RLE encoded dense masks (dict containing keys counts and size).

The masks are typically of size 256x256, they define segmentation within the

bounding box.

Continuous Surface Embeddings Annotations

Continuous surface embeddings annotations also consist of point-based annotations and segmentation annotations. Point-based annotations establish correspondence between image points and 3D model vertices. Segmentation annotations specify foreground regions for a given instane.

Point-based annotations:

dp_x and dp_y specify image point coordinates the same way as for chart-based

annotations;

dp_vertex gives indices of 3D model vertices, which the annotated image points

correspond to;

ref_model specifies 3D model name.

Segmentation annotations:

Segmentations can either be given by dp_masks field or by segmentation field.

dp_masks: RLE encoded dense masks (dict containing keys counts and size).

The masks are typically of size 256x256, they define segmentation within the

bounding box.

segmentation: polygon-based masks stored as a 2D list

[[x1 y1 x2 y2...],[x1 y1 ...],...] of polygon vertex coordinates in a given

image.

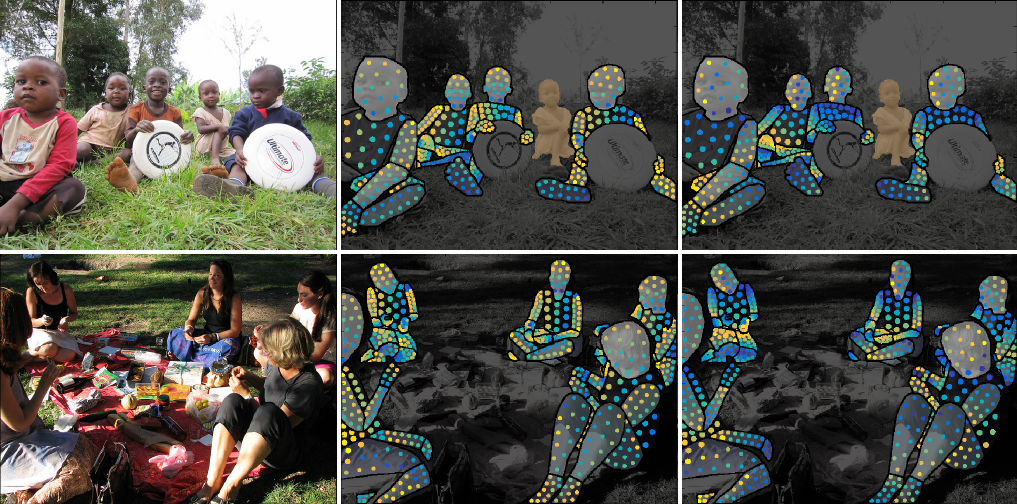

DensePose COCO

Figure 1. Annotation examples from the DensePose COCO dataset.

DensePose COCO dataset contains about 50K annotated persons on images from the COCO dataset The images are available for download from the COCO Dataset download page: train2014, val2014. The details on available annotations and their download links are given below.

Chart-based Annotations

Chart-based DensePose COCO annotations are available for the instances of category

person and correspond to the model shown in Figure 2.

They include dp_x, dp_y, dp_I, dp_U and dp_V fields for annotated points

(~100 points per annotated instance) and dp_masks field, which encodes

coarse segmentation into 14 parts in the following order:

Torso, Right Hand, Left Hand, Left Foot, Right Foot,

Upper Leg Right, Upper Leg Left, Lower Leg Right, Lower Leg Left,

Upper Arm Left, Upper Arm Right, Lower Arm Left, Lower Arm Right,

Head.

Figure 2. Human body charts (fine segmentation) and the associated 14 body parts depicted with rounded rectangles (coarse segmentation).

The dataset splits used in the training schedules are

train2014, valminusminival2014 and minival2014.

train2014 and valminusminival2014 are used for training,

and minival2014 is used for validation.

The table with annotation download links, which summarizes the number of annotated

instances and images for each of the dataset splits is given below:

| Name | # inst | # images | file size | download |

|---|---|---|---|---|

| densepose_train2014 | 39210 | 26437 | 526M | densepose_train2014.json |

| densepose_valminusminival2014 | 7297 | 5984 | 105M | densepose_valminusminival2014.json |

| densepose_minival2014 | 2243 | 1508 | 31M | densepose_minival2014.json |

Continuous Surface Embeddings Annotations

DensePose COCO continuous surface embeddings annotations are available for the instances

of category person. The annotations correspond to the 3D model shown in Figure 2,

and include dp_x, dp_y and dp_vertex and ref_model fields.

All chart-based annotations were also kept for convenience.

As with chart-based annotations, the dataset splits used in the training schedules are

train2014, valminusminival2014 and minival2014.

train2014 and valminusminival2014 are used for training,

and minival2014 is used for validation.

The table with annotation download links, which summarizes the number of annotated

instances and images for each of the dataset splits is given below:

| Name | # inst | # images | file size | download |

|---|---|---|---|---|

| densepose_train2014_cse | 39210 | 26437 | 554M | densepose_train2014_cse.json |

| densepose_valminusminival2014_cse | 7297 | 5984 | 110M | densepose_valminusminival2014_cse.json |

| densepose_minival2014_cse | 2243 | 1508 | 32M | densepose_minival2014_cse.json |

DensePose PoseTrack

Figure 3. Annotation examples from the PoseTrack dataset.

DensePose PoseTrack dataset contains annotated image sequences. To download the images for this dataset, please follow the instructions from the PoseTrack Download Page.

Chart-based Annotations

Chart-based DensePose PoseTrack annotations are available for the instances with category

person and correspond to the model shown in Figure 2.

They include dp_x, dp_y, dp_I, dp_U and dp_V fields for annotated points

(~100 points per annotated instance) and dp_masks field, which encodes

coarse segmentation into the same 14 parts as in DensePose COCO.

The dataset splits used in the training schedules are

posetrack_train2017 (train set) and posetrack_val2017 (validation set).

The table with annotation download links, which summarizes the number of annotated

instances, instance tracks and images for the dataset splits is given below:

| Name | # inst | # images | # tracks | file size | download |

|---|---|---|---|---|---|

| densepose_posetrack_train2017 | 8274 | 1680 | 36 | 118M | densepose_posetrack_train2017.json |

| densepose_posetrack_val2017 | 4753 | 782 | 46 | 59M | densepose_posetrack_val2017.json |

DensePose Chimps

Figure 4. Example images from the DensePose Chimps dataset.

DensePose Chimps dataset contains annotated images of chimpanzees.

To download the images for this dataset, please use the URL specified in

image_url field in the annotations.

Chart-based Annotations

Chart-based DensePose Chimps annotations correspond to the human model shown in Figure 2,

the instances are thus annotated to belong to the person category.

They include dp_x, dp_y, dp_I, dp_U and dp_V fields for annotated points

(~3 points per annotated instance) and dp_masks field, which encodes

foreground mask in RLE format.

Chart-base DensePose Chimps annotations are used for validation only. The table with annotation download link, which summarizes the number of annotated instances and images is given below:

| Name | # inst | # images | file size | download |

|---|---|---|---|---|

| densepose_chimps | 930 | 654 | 6M | densepose_chimps_full_v2.json |

Continuous Surface Embeddings Annotations

Continuous surface embeddings annotations for DensePose Chimps

include dp_x, dp_y and dp_vertex point-based annotations

(~3 points per annotated instance), dp_masks field with the same

contents as for chart-based annotations and ref_model field

which refers to a chimpanzee 3D model chimp_5029.

The dataset is split into training and validation subsets. The table with annotation download links, which summarizes the number of annotated instances and images for each of the dataset splits is given below:

The table below outlines the dataset splits:

| Name | # inst | # images | file size | download |

|---|---|---|---|---|

| densepose_chimps_cse_train | 500 | 350 | 3M | densepose_chimps_cse_train.json |

| densepose_chimps_cse_val | 430 | 304 | 3M | densepose_chimps_cse_val.json |

DensePose LVIS

Figure 5. Example images from the DensePose LVIS dataset.

DensePose LVIS dataset contains segmentation and DensePose annotations for animals on images from the LVIS dataset. The images are available for download through the links: train2017, val2017.

Continuous Surface Embeddings Annotations

Continuous surface embeddings (CSE) annotations for DensePose LVIS

include dp_x, dp_y and dp_vertex point-based annotations

(~3 points per annotated instance) and a ref_model field

which refers to a 3D model that corresponds to the instance.

Instances from 9 animal categories were annotated with CSE DensePose data:

bear, cow, cat, dog, elephant, giraffe, horse, sheep and zebra.

Foreground masks are available from instance segmentation annotations

(segmentation field) in polygon format, they are stored as a 2D list

[[x1 y1 x2 y2...],[x1 y1 ...],...].

We used two datasets, each constising of one training (train)

and validation (val) subsets: the first one (ds1)

was used in Neverova et al, 2020.

The second one (ds2), was used in Neverova et al, 2021.

The summary of the available datasets is given below:

| All Data | Selected Animals (9 categories) |

File | ||||||

|---|---|---|---|---|---|---|---|---|

| Name | # cat | # img | # segm | # img | # segm | # dp | size | download |

| ds1_train | 556 | 4141 | 23985 | 4141 | 9472 | 5184 | 46M | densepose_lvis_v1_ds1_train_v1.json |

| ds1_val | 251 | 571 | 3281 | 571 | 1537 | 1036 | 5M | densepose_lvis_v1_ds1_val_v1.json |

| ds2_train | 1203 | 99388 | 1270141 | 13746 | 46964 | 18932 | 1051M | densepose_lvis_v1_ds2_train_v1.json |

| ds2_val | 9 | 2690 | 9155 | 2690 | 9155 | 3604 | 24M | densepose_lvis_v1_ds2_val_v1.json |

Legend:

#cat - number of categories in the dataset for which annotations are available;

#img - number of images with annotations in the dataset;

#segm - number of segmentation annotations;

#dp - number of DensePose annotations.

Important Notes:

The reference models used for

ds1_trainandds1_valarebear_4936,cow_5002,cat_5001,dog_5002,elephant_5002,giraffe_5002,horse_5004,sheep_5004andzebra_5002. The reference models used fords2_trainandds2_valarebear_4936,cow_5002,cat_7466,dog_7466,elephant_5002,giraffe_5002,horse_5004,sheep_5004andzebra_5002. So reference models for categoriescatainddogare different fords1andds2.Some annotations from

ds1_trainare reused inds2_train(4538 DensePose annotations and 21275 segmentation annotations). The ones for cat and dog categories were remapped fromcat_5001anddog_5002reference models used inds1tocat_7466anddog_7466used inds2.All annotations from

ds1_valare included intods2_valafter the remapping procedure mentioned in note 2.Some annotations from

ds1_trainare part ofds2_val(646 DensePose annotations and 1225 segmentation annotations). Thus one should not train onds1_trainif evaluating onds2_val.